Early History of AI

Intro

Computers now do some of the things humans do when we say “we are thinking”. I am convinced that machines will think in our lifetime, and in a sense they already are. Below the algorithmic level, I suspect there is something we humans are overlooking, something we deny when we say, “but they are just computers, they can never think like us”. Maybe computers will never think like us. Or maybe we will outsource our thinking to the extent that there will be no distinction between man and machine in terms of output.

The history of thinking machines dates back to 1939. Nazi Germany relies on the Enigma, an encryption machine believed to be unbreakable. The Allied forces face a new challenge and turn to Alan Turing, a visionary mathematician who designs a new kind of machine to break the code, helping kickstart the era of computing.

1947

By 1946, militaries around the world understand the power of computers and want one of their own. The problem is efficiency: computers rely on vacuum tubes, which work like giant light bulbs. They require constant maintenance and manual labor, and some military computers grow to the size of entire warehouses. So scientists begin searching for a better way.

A brilliant physicist enters the picture: William Shockley of Bell Labs. He imagines building computers from semiconductors such as the element germanium. A semiconductor sits between conductors like metal and insulators like rubber. It can behave as either, which lets it act as an electrical switch that is off or on (0 or 1). In 1947, Shockley's colleagues John Bardeen and Walter Brattain build the first working device, which becomes known as the transistor and changes technology forever. Though its applications are still theoretical, the smartest minds in the world immediately see the future. A transistor, no bigger than a kernel of corn, sparks visions of supercomputers.

1950

In 1950, Alan Turing introduces the Imitation Game, later called the Turing Test. It is designed to determine whether a machine can exhibit intelligent behavior indistinguishable from a human. The test fundamentally questions the limits of artificial and human intelligence.

Universities such as Harvard, MIT, and Princeton begin offering computer science degrees. Computer engineers become some of the highest-paid skilled workers in the world, and the smartest people flock to these programs.

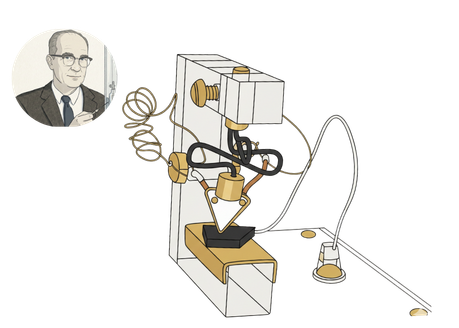

One of them is Marvin Minsky, a 23-year-old PhD candidate at Princeton University. Inspired by Turing’s work, Minsky builds one of the world’s first working neural networks, using wires and six vacuum tubes as synapses. Despite frequent failures, the machine works and lays the foundation for artificial intelligence and neural networks.

Meanwhile, Shockley’s transistor leads to the transistor radio, which becomes one of the best-selling consumer electronic devices of all time. The world gets its first taste of compact electronics. Shockley leaves Bell Labs and starts Shockley Semiconductor in Mountain View, near Palo Alto, making it one of the earliest tech startups in Silicon Valley. He recruits bright minds such as Gordon Moore and Robert Noyce, along with twelve other geniuses.

1956

Shockley receives the Nobel Prize in 1956. Just months later, in 1957, eight of the men he recruited leave and found Fairchild Semiconductor. Fairchild quickly becomes profitable, selling silicon-based transistors to IBM for military navigation systems. This proves that silicon is far superior to germanium, with profit margins nearly 30 times higher.

Manufacturing Crisis

The military now needs thousands of transistors, but manufacturing is unreliable. A simple knock can destroy a transistor. Jean Hoerni, a physicist at Fairchild, invents the planar process, which adds a protective oxide layer to each transistor. This dramatically increases durability and makes production scalable. Fairchild holds the patent, so anyone making transistors this way has to pay them, and their profits soar even higher.

Integrated Circuits

Despite this success, transistors remain limited: each one is a separate component that performs a single task, so building larger circuits means wiring ever more of them together, and scaling becomes impractical. Robert Noyce creates the integrated circuit, allowing many transistors to operate together on a single chip. Though Jack Kilby of Texas Instruments demonstrated a similar idea earlier, Noyce’s version proves more practical to manufacture. This invention creates an entirely new industry. Former Fairchild employees leave to form new companies, later called the “Fairchildren,” including Intel, AMD, and LSI Logic. By some accounts, over 90 percent of today’s computing power can be traced back to Fairchild.

Conclusion

To be continued...